Augmented reality apps made hands-on interactive

Interactive virtual object handling for everyone, everywhere, with just a phone. Open source library for Android with Unity, and other platforms with hardware options.

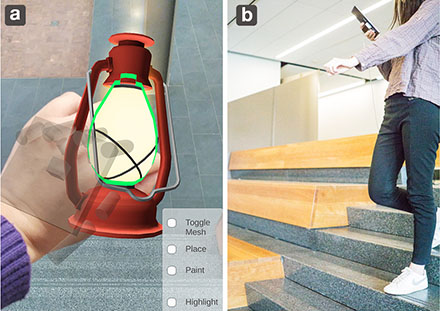

Portal-ble is portable augmented reality that turns the phone into a portal into a virtual world. The virtual and real are merged into a single view, so you can interact with both virtual and real objects with your hand: sketching, manipulating, and throwing them.

We're not talking about gestures in the air, but rather your hands in the space where the virtual object would be.

Which coffee in the following image is real? Actually, both cups are virtual, so grab them both. And the chair with the red seat is virtual, but the gray ones are real. Now pick up and move around any of the chairs.

The Portal-ble library handles the interaction for your app. It tracks your users' hands so they can grab and handle virtual objects. The software automatically helps users perceive depth, judge how to grasp objects, and handle them, by compensating for both innate human imprecision and tracking inaccuracies.

The phone-only version of Portal-ble runs on Android and requires nothing else, while an extended version uses a Leap Motion sensor and runs on Android and iPhone. Portal-ble also partly runs on the 2021 Snap Spectacles (in beta testing). We have an open source "gratitude license" for non-commercial use.

No headset. No controllers. No gloves. Just your hand and your phone.

| Format | Object Handling | Object Throwing | Drawing | Platform |
|---|---|---|---|---|
| Phone Only | Full | Partial | No | Android |
| Phone + Depth Sensor | Full with Micro-Precision* | Full | Full | Android, iPhone |
| Phone + Wrist Display | Partial | No | Full | Android |
| Spectacles | Partial | Full | No | iPhone |

Want to be notified about updates?

Receive updates about software library releases, new feature demos, or additional hardware support.

Phone Only

The basic Portal-ble library requires only an Android smartphone. The base functionality lets you directly manipulate virtual objects in a smartphone augmented reality (AR) environment with your bare hands. Basically, it's hand tracking so you can reach into augmented reality to play with virtual things in 3D space.

The Portal-ble GitHub repository has the documentation to set up and run the Portal-ble example applications and to build your own Unity application that uses Portal-ble. The computer vision is processed by MediaPipe, which is connected to Unity through our wrapper library; the wrapper library is also included in the repository.
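To give a feel for what an app can do with tracked hand data, here is a minimal sketch of pinch detection over MediaPipe-style hand landmarks. It assumes the standard MediaPipe Hands layout of 21 landmarks (wrist at index 0, thumb tip at 4, index fingertip at 8, middle finger knuckle at 9). The function name `is_pinching` and the `ratio_threshold` value are illustrative assumptions; this is not how Portal-ble itself decides that a grab has happened, and it is written in Python rather than Unity C# so it can be run on its own.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]

# Landmark indices from the MediaPipe Hands 21-point layout.
WRIST = 0
THUMB_TIP = 4
INDEX_FINGER_TIP = 8
MIDDLE_FINGER_MCP = 9


def is_pinching(landmarks: Sequence[Point], ratio_threshold: float = 0.35) -> bool:
    """Return True when the thumb and index fingertips are close together.

    The fingertip gap is divided by a rough hand size (wrist to middle
    knuckle) so the result does not depend on how far the hand is from
    the camera. The threshold is an assumed value, not a tuned one.
    """
    if len(landmarks) != 21:
        raise ValueError("expected 21 hand landmarks")

    hand_size = math.dist(landmarks[WRIST], landmarks[MIDDLE_FINGER_MCP])
    if hand_size == 0:
        return False  # degenerate frame, treat as "not pinching"

    gap = math.dist(landmarks[THUMB_TIP], landmarks[INDEX_FINGER_TIP])
    return gap / hand_size < ratio_threshold
```

Normalizing by hand size is a deliberate choice here: a fixed distance threshold would behave differently as the hand moves toward or away from the phone.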

Android version 7.0 (Nougat) or above is supported, and Android versions up to version 12 have been tested. iOS support for phone-only mode is still under development, and may be possible in the future without the depth sensor.

Phone + Depth Sensor

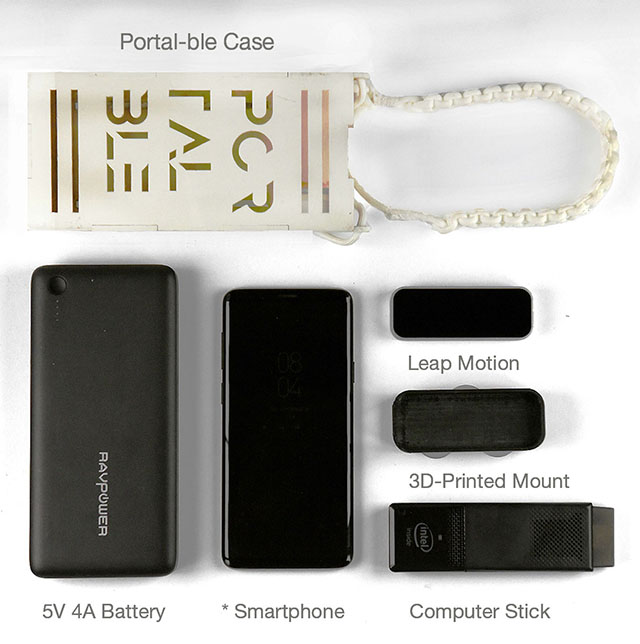

Portal-ble can work in a Phone + Depth Sensor configuration, in which a Leap Motion depth sensor is attached to the back of the phone with a 3D-printed mount. The Leap Motion is existing consumer hardware that can be purchased off the shelf.

This additional hardware is not needed for the basic functionality, but the extension upgrades the capabilities of Portal-ble by enabling higher-precision object manipulation, supporting throwing and sketching, and running on both Android and iOS rather than just Android.

The Leap Motion connects to an Intel compute stick that wirelessly transmits hand positions to the phone. A longer description of the Phone + Depth Sensor version of Portal-ble and its evaluation was published at UIST 2019, the ACM Symposium on User Interface Software and Technology.
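The wire format used between the compute stick and the phone is not described here, so the following is only a sketch of one way such hand-position frames could be packed for wireless transmission. Everything in it is an assumption made for illustration: the header layout (frame id, timestamp, joint count), the use of 32-bit floats for joint coordinates, and the function names `encode_hand_frame` and `decode_hand_frame`.

```python
import struct
from typing import List, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical packet layout (NOT Portal-ble's actual protocol):
#   header: uint32 frame id, float64 timestamp in seconds, uint8 joint count
#   body:   joint count * 3 float32 values (x, y, z per joint)
# "<" selects little-endian with no padding, so the header is 13 bytes.
_HEADER = struct.Struct("<IdB")


def encode_hand_frame(frame_id: int, timestamp: float, joints: List[Vec3]) -> bytes:
    """Pack one frame of joint positions into a compact binary payload."""
    if len(joints) > 255:
        raise ValueError("joint count must fit in one byte")
    flat = [coord for joint in joints for coord in joint]
    body = struct.pack(f"<{len(flat)}f", *flat)
    return _HEADER.pack(frame_id, timestamp, len(joints)) + body


def decode_hand_frame(payload: bytes) -> Tuple[int, float, List[Vec3]]:
    """Unpack a payload produced by encode_hand_frame."""
    frame_id, timestamp, count = _HEADER.unpack_from(payload, 0)
    expected = _HEADER.size + 12 * count  # 3 float32 values per joint
    if len(payload) != expected:
        raise ValueError("payload length does not match joint count")
    flat = struct.unpack_from(f"<{3 * count}f", payload, _HEADER.size)
    joints = [(flat[i], flat[i + 1], flat[i + 2]) for i in range(0, len(flat), 3)]
    return frame_id, timestamp, joints
```

A payload like this is small enough to send as a single datagram per frame, which is one reason a fixed binary layout can be attractive for low-latency hand tracking; a text format such as JSON would be easier to debug at the cost of larger messages.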

A more recent version of the software for this Phone + Depth Sensor format extends the previously published version, runs on more recent Android and iOS devices, and features higher-precision manipulation of smaller objects. Source code and detailed instructions for assembling Portal-ble in this most recent Phone + Depth Sensor version are now available and can be licensed by contacting us.

Phone + Wrist Display

An experimental version of Portal-ble adds a secondary display on the wrist to provide an additional perspective of the virtual objects and enable multi-display free-hand interactions. The wrist display extends the smartphone's canvas perceptually, allowing the authors to work beyond the smartphone screen view, mitigating the difficulties of depth perception, and improving the usability of direct free-hand manipulation.

This version of Portal-ble has focused on sketching interactions, and three artists used an autobiographical design approach to create a new form of 3D art, as reported in the paper.

Spectacles

Portal-ble can run on devices that are not smartphones, and is intended for other mobile augmented-reality-capable formats in the future. One such format is augmented reality glasses, such as the 2021 Snap Spectacles: lightweight, mobile glasses made by Snap that are ideal for Portal-ble.

Techniques from Portal-ble are applied in our demo applications running on the Spectacles. Our first demo application incorporates partial object manipulation and naturalistic throwing interactions.

If you have a pair of the 2021 Spectacles, you can pin and then run our Pokemon CatchAR lens on your own device. The lens also runs on iOS and Android, and tens of thousands of people have already tried it on their smartphones, but the throwing physics is a bit off on phones.

We are currently working on improving the lens to feature a waypoint navigation system to find the Pokemon, and adding a game mechanic. Subscribe to updates to hear when that is released!

Portal-ble is led by Jing Qian and Jeff Huang, with contributions from people with backgrounds in computer science, data science, and design. Contributors in alphabetical order include Angel Cheung, Benjamin Attal, Enmin Zhou, Haoming Lai, Ian Gonsher, James Tompkin, Jiaju Ma, John Hughes, Meredith Young-Ng, Tongyu Zhou, and Xiangyu Li.

This work is funded by the National Science Foundation and by a gift from Pixar. Spectacles provided by Snap.